Docker 101: Unveiling Key Terminologies and the Pre-Docker Era of Server Management

PART I

EVOLUTION OF VIRTUALIZATION AND CONTAINERIZATION

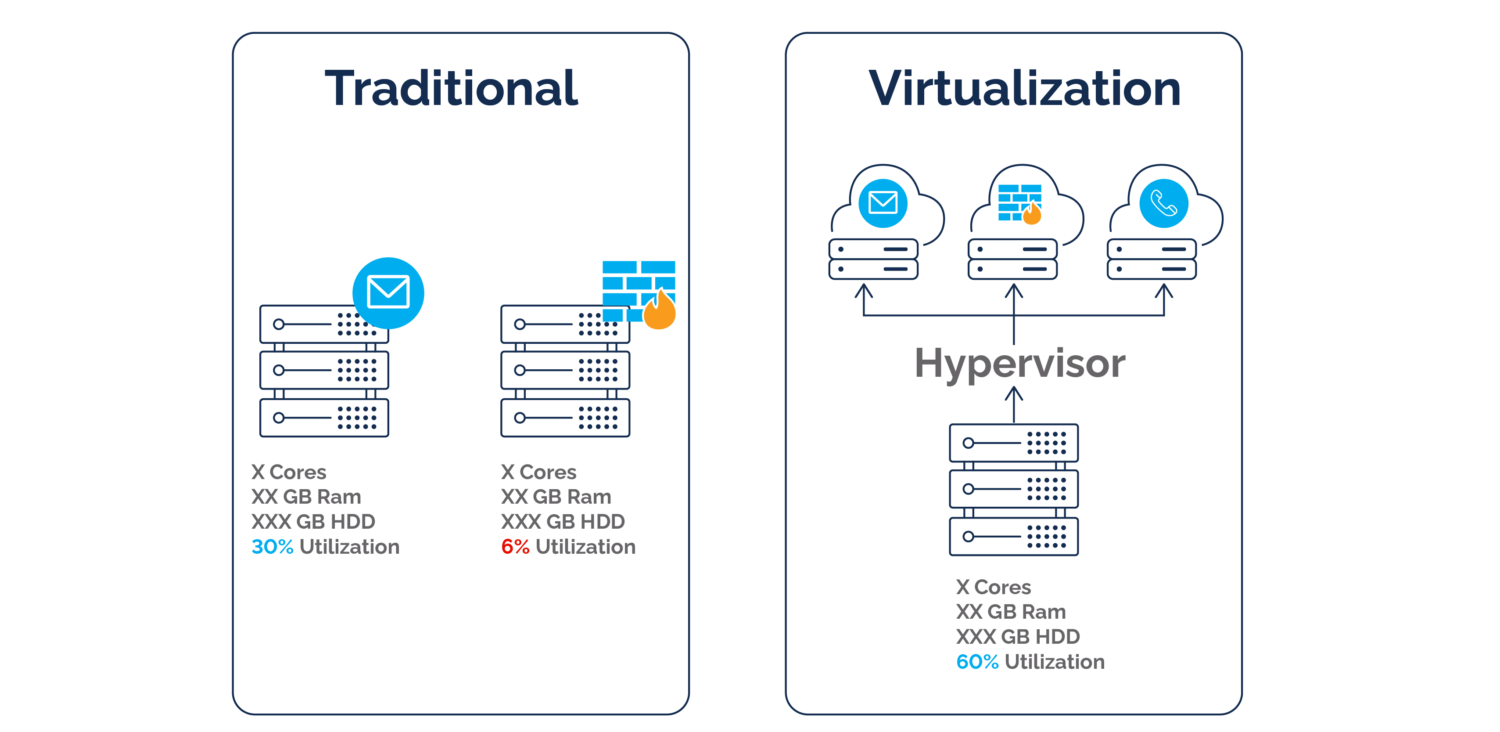

Stage 1: Dedicated Servers

- Problem: Each application ran on its own dedicated server, which left hardware underused, wasted power, and made traffic spikes difficult to handle.

Stage 2: Introduction of Virtual Machines (VMs)

Solution: VMware popularized virtualization on commodity x86 hardware with VMs, allowing multiple virtual servers to run on the same physical machine. VMs provided isolation but had some drawbacks.

Problem 1: Each VM required its own guest operating system, consuming additional CPU, memory, and disk.

Problem 2: Every VM carried a full OS installation, so the same OS files were duplicated across VMs and wasted space.

Problem 3: Compatibility and dependency differences between environments often resulted in "works on my machine" problems.

Problem 4: Migration and setup were time-consuming.

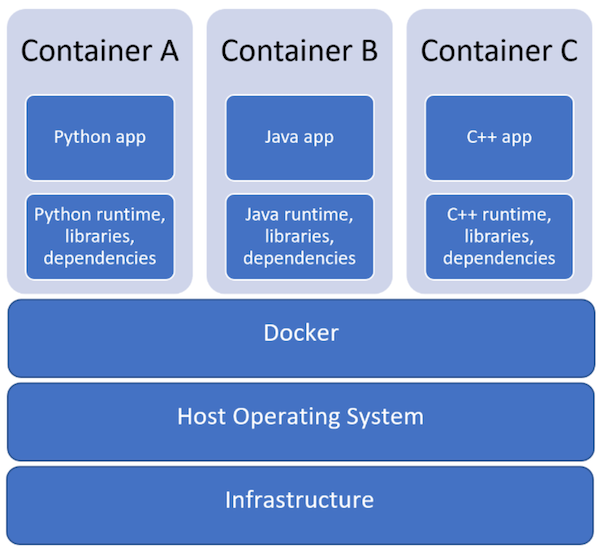

Stage 3: Containerization (Introduction of Docker)

Solution: Docker popularized containerization, in which multiple isolated applications run on the same OS kernel.

Docker aimed to democratize containerization and make it accessible to a wide range of developers.

Challenges Before Docker:

Manual Configuration: Software deployment involved manual server configuration and dependency setup.

Borrowed Servers: Renting servers from hosting providers was common but risky, because a server failure meant repeating the manual setup on a replacement machine.

Common Practices and Tools Before Docker:

Configuration Management Tools: Tools like Puppet, Chef, and Ansible automated server setup, but they still configured each host in place, so environments could drift apart over time.

Binary Distributions: Software applications were often distributed as binary packages.

Custom Scripting: Custom scripts were created for deployment, varying in quality and reliability.

Environment Variables: Environment variables and configuration files were used to adapt applications to each server, but settings that differed between machines could cause inconsistent behavior.

Compatibility and Dependency Challenges: Different projects had varying library and runtime requirements, leading to conflicts.

Limited Portability: Applications often faced compatibility issues when moved to different environments.
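The dependency conflicts described above can be illustrated with a small sketch. The project names, library names, and version numbers are hypothetical and chosen only for illustration. The function reports every library that two projects on one shared server pin to different versions.

```python
def find_conflicts(requirements):
    """Return libraries that projects on one shared host pin to different versions."""
    pinned = {}  # library name -> {version: [projects that need it]}
    for project, libs in requirements.items():
        for lib, version in libs.items():
            pinned.setdefault(lib, {}).setdefault(version, []).append(project)
    # A library is in conflict when more than one version is requested.
    return {lib: versions for lib, versions in pinned.items() if len(versions) > 1}


# Hypothetical projects sharing a single server (all names are made up).
requirements = {
    "billing-app": {"libssl": "1.0", "python": "2.7"},
    "reports-app": {"libssl": "1.1", "python": "2.7"},
}

conflicts = find_conflicts(requirements)
print(conflicts)
# {'libssl': {'1.0': ['billing-app'], '1.1': ['reports-app']}}
```

On a shared host only one of the two `libssl` versions can usually be installed system-wide. When each application ships with its own libraries, as a container image does, the conflict does not arise.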

Docker's Impact:

Introduction of Containerization: Docker brought containerization into mainstream use, simplifying software deployment.

Lightweight and Consistent: Docker containers provide a lightweight and consistent environment, making dependency management easier.

Improved Compatibility: Docker containers helped applications run consistently across different environments.

Enhanced Efficiency: Docker significantly improved resource utilization, portability, and reliability.

Revolutionized Software Deployment: Docker revolutionized the packaging, distribution, and execution of applications, addressing the challenges presented by previous methods.

VIRTUALIZATION

Virtualization is a technology that enables the creation of virtual, rather than physical, instances of computing resources, such as servers, storage, or networks. These virtualized resources are abstracted from the underlying physical hardware, making it easier to manage and utilize computing resources efficiently. Virtualization has various applications, including server virtualization, storage virtualization, and network virtualization, and it's commonly used in data centres and cloud computing environments to optimize resource usage and improve scalability and flexibility.

VIRTUAL MACHINE

A virtual machine (VM) is a software-based emulation of a computer that runs on a physical computer. It enables multiple independent operating systems to run simultaneously on a single hardware system. This technology optimizes hardware utilization, reduces costs, and provides isolation for diverse applications.

A virtual machine provides a dedicated virtual server with its own allocated CPU, RAM, and storage, allowing you to run an application on a guest operating system within a physical server.

HYPERVISOR

A hypervisor is computer software, firmware, or hardware that creates and runs virtual machines. It shares the host's physical hardware among the virtual machines and manages their access to it.

The main functions of a hypervisor are:

Resource management - It allocates CPU, memory, storage and networking resources to virtual machines.

Hardware abstraction - It abstracts the physical hardware and presents virtual hardware interfaces to the guest VMs.

Virtual machine management - It controls the creation, execution and termination of virtual machines.

Performance isolation - It ensures that one VM does not impact the performance of other VMs.

Security - It isolates the guest VMs and provides security controls.
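The resource management and virtual machine management functions listed above can be sketched with a toy model. This is not how a real hypervisor is implemented or driven. The class name `ToyHypervisor`, its methods, and the resource figures are all hypothetical. The sketch only shows the bookkeeping idea: a host has a fixed pool of CPUs and memory, and each VM is given a slice of it.

```python
class ToyHypervisor:
    """Toy bookkeeping model of a host that hands out CPUs and memory to VMs."""

    def __init__(self, total_cpus, total_memory_gb):
        self.free_cpus = total_cpus
        self.free_memory_gb = total_memory_gb
        self.vms = {}  # VM name -> (cpus, memory_gb)

    def create_vm(self, name, cpus, memory_gb):
        # Refuse to create a VM the host cannot back with real resources.
        if name in self.vms:
            raise ValueError(f"VM {name!r} already exists")
        if cpus > self.free_cpus or memory_gb > self.free_memory_gb:
            raise RuntimeError("not enough free resources on the host")
        self.free_cpus -= cpus
        self.free_memory_gb -= memory_gb
        self.vms[name] = (cpus, memory_gb)

    def destroy_vm(self, name):
        # Terminating a VM returns its resources to the free pool.
        cpus, memory_gb = self.vms.pop(name)
        self.free_cpus += cpus
        self.free_memory_gb += memory_gb


# Hypothetical host with 8 CPUs and 32 GB of RAM.
host = ToyHypervisor(total_cpus=8, total_memory_gb=32)
host.create_vm("web", cpus=2, memory_gb=8)
host.create_vm("db", cpus=4, memory_gb=16)
print(host.free_cpus, host.free_memory_gb)  # 2 8

host.destroy_vm("web")
print(host.free_cpus, host.free_memory_gb)  # 4 16
```

Because each VM can only use what it was allocated, one VM cannot consume the resources reserved for another, which is the intuition behind performance isolation.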

TYPES OF HYPERVISOR

A hypervisor can run directly on hardware (Type 1, also called bare-metal) or on top of a host operating system (Type 2, also called hosted). Type 1 hypervisors generally perform better because they access the hardware directly rather than through a host OS.

CONTAINER

A container, in the context of computing and software development, refers to a lightweight, standalone, and executable software package that includes everything needed to run a piece of software, including the code, runtime, system tools, libraries, and settings. Containers are used for application virtualization, allowing software to run consistently and reliably across different computing environments, such as development, testing, and production.

A container encapsulates an application with its own operating environment. It can run on any host that provides a compatible container runtime, without per-host configuration, which removes most dependency issues. A VM virtualizes hardware, whereas a container virtualizes the operating system. Virtualization in general is the creation of a virtual version of a resource such as an operating system, server, storage device, or network. Containerization is, in essence, a lightweight approach to virtualization.

Key characteristics of containers include:

Isolation: Software within a container is isolated from the host system and from other containers. This isolation helps prevent conflicts and helps applications run consistently.

Portability: Containers package everything needed to run an application, including code, runtime, libraries, and settings, into a standalone image. You can create a container image on one system and run it on another without significant modification, which is one of the reasons containers are popular in cloud computing environments.

Efficiency: Containers typically use fewer resources than traditional virtual machines because they share the host OS kernel, which reduces overhead.

Ease of Deployment: Containers are easy to deploy and scale. Container orchestration platforms like Kubernetes help manage large numbers of containers in a clustered environment.

Version Control: Container images can be versioned and stored in container registries, allowing for easy updates and rollbacks.

No Guest OS: Containers do not require a separate operating system for each instance, unlike full virtual machines (VMs), which each carry their own OS. This makes containers lightweight and leads to significant resource savings.

Application Encapsulation: Containers encapsulate an application with its own operating environment, which reduces dependency and compatibility issues.

OS Virtualization: Containerization is a form of OS virtualization that isolates applications and their dependencies while sharing the host OS kernel. This contrasts with the hardware virtualization used in VMs.
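The resource savings from sharing the host OS kernel can be made concrete with some back-of-the-envelope arithmetic. The figures below (memory per application, memory per guest OS, instance count) are hypothetical and chosen only to show the shape of the calculation. Real numbers vary widely by workload.

```python
def vm_memory_gb(instances, app_gb, guest_os_gb):
    """Each VM carries its own guest OS in addition to the application."""
    return instances * (app_gb + guest_os_gb)


def container_memory_gb(instances, app_gb, runtime_overhead_gb=0.0):
    """Containers share the host kernel, so only the applications are multiplied."""
    return instances * app_gb + runtime_overhead_gb


# Hypothetical figures: 10 instances, 0.5 GB per app, 1 GB per guest OS.
vm_total = vm_memory_gb(instances=10, app_gb=0.5, guest_os_gb=1.0)
container_total = container_memory_gb(instances=10, app_gb=0.5)

print(vm_total)         # 15.0
print(container_total)  # 5.0
```

Under these assumed numbers the guest operating systems account for two thirds of the memory used by the VM setup, which is the overhead containers avoid.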

Docker is the most widely used containerization technology and the one that popularized containers. Other tools in the ecosystem include the container runtime containerd, the alternative container engine Podman, and the orchestration platform Kubernetes.

Containers have become a fundamental building block for modern software development, enabling developers to package their applications and their dependencies into a consistent and reproducible format. They are used for microservices architectures, continuous integration and deployment (CI/CD), and many other scenarios where consistency, portability, and scalability are important.

DOCKER

Docker is a container platform used for developing, testing, and deploying applications.

Docker uses a client-server architecture: the Docker client sends commands to the Docker daemon, which builds images and runs containers. Developers define an application's dependencies in a Dockerfile, build a Docker image from it, and run that image as a Docker container. This approach helps the application behave consistently across different environments.
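The Dockerfile, image, and container steps can be sketched as follows. This is a minimal illustration, not a recommended project layout: the image name `hello-app:1.0`, the file `app.py`, and the base image are assumptions made for the example. The script only prints the `docker build` and `docker run` commands and does not execute them, so it runs without Docker installed.

```python
import shlex

# Illustrative Dockerfile. The base image and file names are assumptions.
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY app.py .
CMD ["python", "app.py"]
"""


def build_command(image_tag, context_dir="."):
    """Command that builds an image from the Dockerfile in context_dir."""
    return ["docker", "build", "-t", image_tag, context_dir]


def run_command(image_tag):
    """Command that starts a container from the image and removes it on exit."""
    return ["docker", "run", "--rm", image_tag]


image_tag = "hello-app:1.0"  # hypothetical image name and version tag

print(DOCKERFILE)
print(shlex.join(build_command(image_tag)))  # docker build -t hello-app:1.0 .
print(shlex.join(run_command(image_tag)))    # docker run --rm hello-app:1.0
```

The Dockerfile is the recipe, the image is the packaged result of building it, and the container is a running instance of that image. The same image can be run on a laptop or on a server without rebuilding.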

Docker serves as a tool for creating, managing, and scaling containers, ensuring application portability and reliability.

Why use Docker?

Docker can help you ship code faster and gives you more control over your applications. Applications deployed in containers are easier to deploy, scale, and roll back, and issues are easier to identify. Docker can also save money by using hardware resources more efficiently. Docker-based applications can be moved from local development machines to production deployments with few changes. Common uses include microservices, data processing, continuous integration and delivery, and Containers as a Service.

Docker allows you to run applications within isolated environments called containers. The application inside a container runs separately from other containers and from the rest of the host system, even though all containers share the host's kernel. The application is generally unaware of what is happening outside its container, which improves security, consistency, and manageability. Docker is a powerful technology for packaging, distributing, and running applications with a high degree of predictability and isolation.

SUMMARY